kubernetes-solutions

Kubernetes Templates

1. Network Templates

1. Allow/Deny all ingress traffic

- Allow all ingress: if you want to allow all incoming connections to all pods in a namespace, create a policy that explicitly allows that traffic.

- Deny all ingress: a default-deny policy similarly selects all pods but does not allow any ingress traffic to those pods.
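A minimal sketch of both policies, modeled on the default policies in the Kubernetes NetworkPolicy documentation (the policy names are illustrative). An empty `podSelector: {}` selects every pod in the namespace, and a single empty rule `- {}` matches all traffic. NetworkPolicies only take effect when the cluster's network plugin enforces them.

```yaml
# Allow all ingress: selects every pod and admits traffic from any source.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-all-ingress
spec:
  podSelector: {}
  ingress:
    - {}
  policyTypes:
    - Ingress
---
# Deny all ingress: selects every pod but lists no ingress rules,
# so no inbound traffic is allowed.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
spec:
  podSelector: {}
  policyTypes:
    - Ingress
```

Policies are additive, so apply one or the other: if both exist in the same namespace, the allow-all rule wins.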

2. Allow/Deny all egress traffic

- Allow all egress: if you want to allow all connections from all pods in a namespace, create a policy that explicitly allows all outgoing connections from pods in that namespace.

- Deny all egress: a default-deny egress policy similarly selects all pods but does not allow any egress traffic from those pods.
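The egress counterparts follow the same shape, again as a sketch with illustrative names: `policyTypes: [Egress]` makes the policy govern outbound traffic, and the presence or absence of an `egress` rule decides whether anything is allowed.

```yaml
# Allow all egress: every pod may open connections to any destination.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-all-egress
spec:
  podSelector: {}
  egress:
    - {}
  policyTypes:
    - Egress
---
# Deny all egress: no outbound traffic is allowed, including DNS lookups.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-egress
spec:
  podSelector: {}
  policyTypes:
    - Egress
```

Because a default-deny egress policy also blocks DNS, pods usually need an additional policy that allows UDP and TCP port 53 to the cluster DNS service.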

3. Restrict access to pods based on IP addresses

Network Policies let you define rules that control traffic to and from pods. To restrict access by IP address, create a NetworkPolicy manifest whose ingress rules use an `ipBlock` with these fields:

- `cidr`: the allowed IP range in CIDR notation. Only traffic from addresses within this range is allowed.
- `except`: optional sub-ranges of `cidr` to exclude from the allowed range.
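A sketch of such a policy. It admits TCP traffic on port 80 to pods labeled `app: my-app` only from `172.17.0.0/16`, excluding `172.17.1.0/24`. The policy name, label, address ranges, and port are all illustrative.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-from-ip-range      # hypothetical name
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: my-app                # hypothetical label of the protected pods
  policyTypes:
    - Ingress
  ingress:
    - from:
        - ipBlock:
            cidr: 172.17.0.0/16  # allowed source range
            except:
              - 172.17.1.0/24    # carved out of the allowed range
      ports:
        - protocol: TCP
          port: 80
```

`ipBlock` is meant for cluster-external addresses, because pod IPs are ephemeral. Load balancers or NAT may also rewrite the source IP before traffic reaches the pod, so check what source address your pods actually see.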

2. Deployment Templates

1. Start a deployment only after its dependencies are ready

Use an init container: another container in the same pod that runs first. When it completes successfully, Kubernetes automatically starts the main container.

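```yaml
# Pod spec fragment: the init container must exit successfully before main-app starts.
initContainers:
  - name: wait-for-main-app
    image: busybox
    # Poll the dependency's health endpoint every 5 seconds until it responds.
    command: ["sh", "-c", "until wget -qO- main-application:8080/healthz; do sleep 5; done"]
containers:
  - name: main-app
```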

Alternatively, use netcat (`nc`) to wait until the dependencies' ports accept connections:

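```yaml
initContainers:
  - name: wait-for-services
    image: busybox
    command: ["/bin/sh", "-c"]
    # Retry every 2 seconds until both postgres:5432 and redis:9000 accept TCP
    # connections (-z: probe only, -w 2: 2-second timeout). Redis listens on
    # 6379 by default, so 9000 assumes a custom Service port.
    args:
      - "until echo 'Waiting for postgres...' && nc -vz -w 2 postgres 5432 && echo 'Waiting for redis...' && nc -vz -w 2 redis 9000; do echo 'Retrying...'; sleep 2; done"
```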

2. Sidecar container

Sidecar containers are auxiliary containers that run alongside the main application container within the same Kubernetes Pod. They provide additional functionality, such as logging, monitoring, or handling specific tasks, without affecting the primary application.
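A common sidecar pattern is a log shipper that reads what the main container writes to a shared volume. The sketch below is illustrative: the Pod name is hypothetical, and `busybox` stands in for both the real application and a real log agent. Both containers mount the same `emptyDir` volume, and the sidecar streams the log file to its own standard output.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: app-with-log-sidecar        # hypothetical name
spec:
  volumes:
    - name: logs
      emptyDir: {}                  # scratch volume shared by both containers, deleted with the pod
  containers:
    - name: app                     # stand-in for the main application
      image: busybox
      # Append a timestamp to the log file every 5 seconds.
      command: ["/bin/sh", "-c", "while true; do date >> /var/log/app/app.log; sleep 5; done"]
      volumeMounts:
        - name: logs
          mountPath: /var/log/app
    - name: log-sidecar             # auxiliary container running alongside the app
      image: busybox
      # Follow the shared file (retrying until it exists) and print it to stdout.
      command: ["/bin/sh", "-c", "tail -n+1 -F /var/log/app/app.log"]
      volumeMounts:
        - name: logs
          mountPath: /var/log/app
          readOnly: true
```

`kubectl logs app-with-log-sidecar -c log-sidecar` then shows the application's log lines. Newer Kubernetes releases also support native sidecars, declared as init containers with `restartPolicy: Always`, which start before the main containers and keep running alongside them.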

3. Graceful Pod Shutdown with PreStop Hooks

PreStop hooks allow for the execution of specific commands or scripts inside a pod just before it gets terminated. This capability is crucial for ensuring that applications shut down gracefully, saving state where necessary, or performing clean-up tasks to avoid data corruption and ensure a smooth restart.

When to Use: Implement PreStop hooks in environments where service continuity is critical, and you need to ensure zero or minimal downtime during deployments, scaling, or pod recycling.

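This configuration delays shutdown by 30 seconds, giving endpoint and load-balancer updates time to stop sending new requests to the pod, and then runs `nginx -s quit`, which makes nginx finish serving current requests before exiting. The preStop hook counts against the pod's termination grace period (30 seconds by default), so `terminationGracePeriodSeconds` must be longer than the hook; otherwise the container can be killed before nginx shuts down gracefully.

```yaml
spec:
  # Must exceed the 30 s sleep plus nginx's drain time; 60 is an illustrative value
  # (the default of 30 s would expire during the sleep).
  terminationGracePeriodSeconds: 60
  containers:
    - name: nginx-container
      image: nginx
      lifecycle:
        preStop:
          exec:
            # Wait for traffic to move away from the pod, then ask nginx to
            # stop gracefully (finish in-flight requests, then exit).
            command: ["/bin/sh", "-c", "sleep 30 && nginx -s quit"]
```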

4. Expose a ConfigMap value as an environment variable

You can achieve this with `configMapKeyRef`:

```yaml
env:
  - name: MY_GREETING
    valueFrom:
      configMapKeyRef:
        key: greeting
        name: test-cm
```
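For completeness, a sketch of a ConfigMap named `test-cm` and a Pod that consumes it. The greeting text and the Pod name are hypothetical; the `env` entry matches the fragment above.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: test-cm
data:
  greeting: "Hello from the ConfigMap"   # hypothetical value
---
apiVersion: v1
kind: Pod
metadata:
  name: configmap-env-demo               # hypothetical name
spec:
  restartPolicy: Never
  containers:
    - name: demo
      image: busybox
      # Print the injected variable once and exit.
      command: ["/bin/sh", "-c", "echo $MY_GREETING"]
      env:
        - name: MY_GREETING
          valueFrom:
            configMapKeyRef:
              name: test-cm
              key: greeting
      # Alternatively, import every key of the ConfigMap as an environment variable:
      # envFrom:
      #   - configMapRef:
      #       name: test-cm
```

Environment variables are resolved when the container starts, so later edits to the ConfigMap do not reach running containers until they restart. If the ConfigMap or key is missing, the container does not start unless the reference sets `optional: true`.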

3. Service Templates

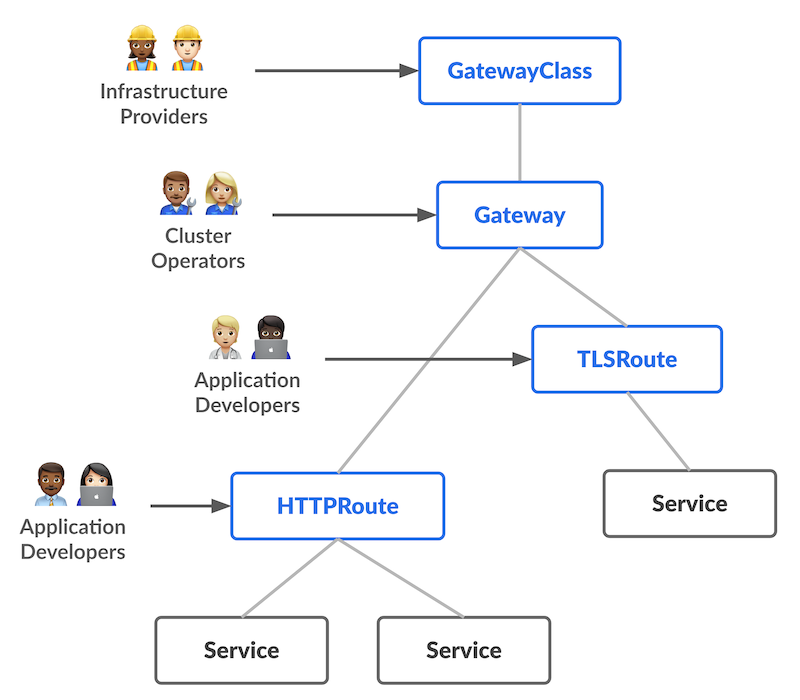

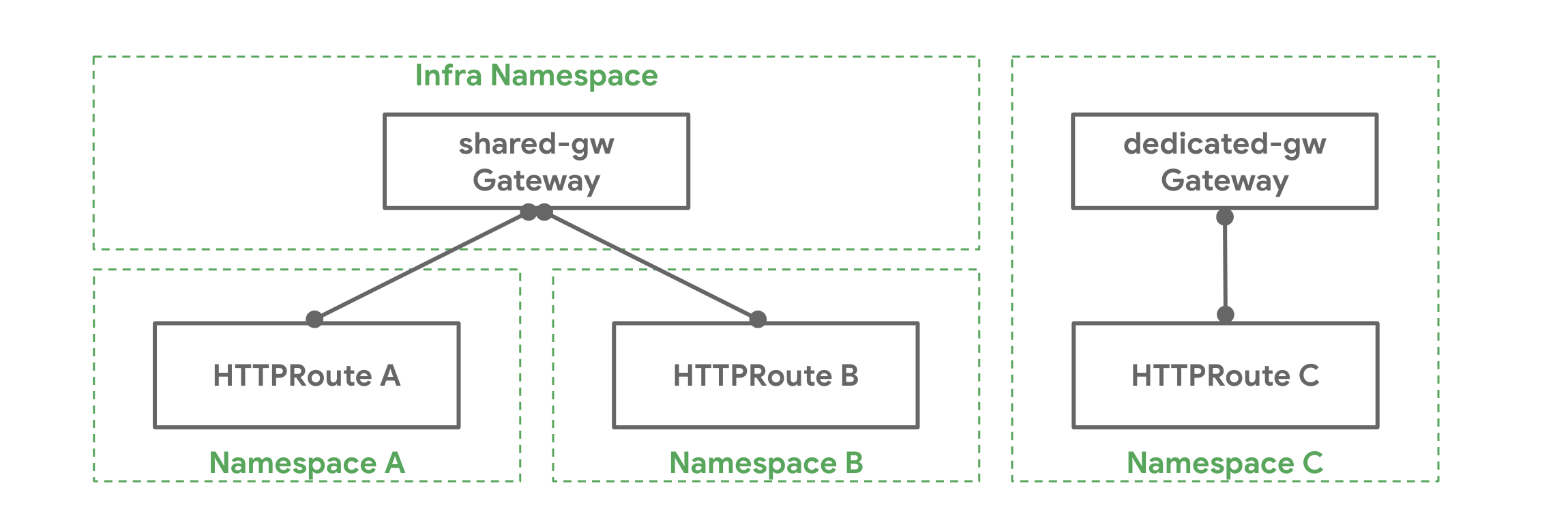

1. Gateway API Service

Gateway API is an official Kubernetes project focused on L4 and L7 routing in Kubernetes. This project represents the next generation of Kubernetes Ingress, Load Balancing, and Service Mesh APIs. From the outset, it has been designed to be generic, expressive, and role-oriented.

How it Works

The following is required for a Route to be attached to a Gateway:

- The Route needs an entry in its parentRefs field referencing the Gateway.

- At least one listener on the Gateway needs to allow this attachment.

Gateway API offers a more expressive and flexible approach to routing traffic into (and, through its service-mesh support, within) Kubernetes clusters, while Ingress provides a simpler, more basic way to route external HTTP traffic to Services. The Ingress API is frozen but not deprecated; new networking features are added to Gateway API instead, which gives Kubernetes a standardized, role-oriented way to manage networking resources.
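The attachment rules above can be sketched with a Gateway and an HTTPRoute. All names, the namespace `infra`, the hostname, and the backend Service are hypothetical, and the cluster needs the Gateway API CRDs plus a controller that implements the referenced GatewayClass.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: example-gateway            # hypothetical
  namespace: infra                 # hypothetical
spec:
  gatewayClassName: example-class  # hypothetical; provided by your Gateway controller
  listeners:
    - name: http
      protocol: HTTP
      port: 80
      allowedRoutes:
        namespaces:
          from: All                # listener accepts Routes from any namespace (default is Same)
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: example-route
  namespace: default
spec:
  parentRefs:                      # attaches this Route to the Gateway above
    - name: example-gateway
      namespace: infra
  hostnames:
    - "www.example.com"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: my-service         # hypothetical backend Service
          port: 8080
```

Both conditions hold here: the HTTPRoute lists the Gateway in `parentRefs`, and the `http` listener's `allowedRoutes` admits Routes from the `default` namespace.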

2. Service Internal Traffic Policy

The Service field `spec.internalTrafficPolicy` controls how traffic that originates inside the cluster is routed to a Service's endpoints. With the default value, `Cluster`, traffic can go to any ready endpoint on any node. With `Local`, kube-proxy sends traffic only to endpoints on the same node as the client, and drops it if that node has no ready endpoint.

Keeping traffic node-local: setting the policy to `Local` is useful when every node runs its own endpoint, such as a DaemonSet-based logging or metrics agent, because it avoids cross-node hops and reduces latency. It is not an access-control mechanism: it does not restrict which clients can reach the Service, so use NetworkPolicies for isolation.
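A sketch of a Service for such a node-local agent. The name, label, and ports are hypothetical, and the label is assumed to be set on the pods of a DaemonSet so that every node has a local endpoint.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: node-agent               # hypothetical
spec:
  selector:
    app: node-agent              # hypothetical label on the DaemonSet's pods
  ports:
    - protocol: TCP
      port: 80
      targetPort: 9376
  internalTrafficPolicy: Local   # in-cluster clients only reach the endpoint on their own node
```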

4. Advanced Template Details

1. ResourceQuota and LimitRange

LimitRange sets constraints on the amount of resources (like CPU and memory) that can be requested or used by containers in a namespace. It helps ensure that no single container hogs all the resources. ResourceQuota, on the other hand, is like a budget for a namespace, limiting the total resources that can be consumed within it. This ensures fair resource distribution across different teams or applications.

For example, a ResourceQuota can cap the total CPU and memory that all pods in a namespace may request or use. This prevents the workloads in one namespace from monopolizing cluster resources and degrading applications in other namespaces. Quotas can also limit the number of objects, such as pods or Services, that can be created in a namespace, to avoid overloading the cluster.
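A sketch of both objects for a hypothetical namespace `team-a`. The quota caps the namespace's totals, while the LimitRange fills in defaults and enforces a per-container ceiling. All numbers are illustrative.

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-a-quota          # hypothetical
  namespace: team-a           # hypothetical namespace
spec:
  hard:
    requests.cpu: "4"         # sum of CPU requests across all pods
    requests.memory: 8Gi
    limits.cpu: "8"           # sum of CPU limits across all pods
    limits.memory: 16Gi
    pods: "20"                # object-count limits
    services: "10"
---
apiVersion: v1
kind: LimitRange
metadata:
  name: team-a-limits
  namespace: team-a
spec:
  limits:
    - type: Container
      default:                # limit applied when a container sets none
        cpu: 500m
        memory: 512Mi
      defaultRequest:         # request applied when a container sets none
        cpu: 250m
        memory: 256Mi
      max:                    # no single container may exceed this
        cpu: "2"
        memory: 2Gi
```

The two work well together: once a quota tracks `requests.cpu` or `limits.cpu`, pods that do not specify those values are rejected, and the LimitRange defaults supply them automatically.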

2. Operator Groups

An OperatorGroup is a resource of the Operator Lifecycle Manager (OLM), not of core Kubernetes. It provides multitenant configuration for the operators installed in its namespace: administrators use it to specify which namespaces those operators watch, and OLM generates the RBAC (Role-Based Access Control) permissions the operators need in those namespaces.

The primary use of an OperatorGroup is to define a logical grouping of target namespaces for the operators installed alongside it. This helps in organizing and managing operators across a cluster: by installing an operator into a namespace that has an OperatorGroup, you control which namespaces the operator has access to and which resources it can manage. A namespace should contain only one OperatorGroup.
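A sketch of an OperatorGroup, assuming OLM is installed. The group name and all three namespaces are hypothetical: operators installed in `my-operators` would watch only `team-a` and `team-b`.

```yaml
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: my-group             # hypothetical
  namespace: my-operators    # hypothetical: namespace where the operators are installed
spec:
  targetNamespaces:          # namespaces the member operators watch and get RBAC for
    - team-a
    - team-b
```

Leaving out `targetNamespaces` (and any namespace selector) makes the group select all namespaces, which is how cluster-wide operators are usually installed.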

3. Horizontal Pod Autoscaling

Based on Custom Metrics:

Horizontal Pod Autoscaler (HPA) can scale your deployments based on custom metrics, not just standard CPU and memory usage. This is particularly useful for applications whose scaling needs are tied to specific business metrics or performance indicators, such as queue length, request latency, or custom application metrics.
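A sketch of an HPA driven by a per-pod custom metric, using the `autoscaling/v2` API. The Deployment name `worker` and the metric `queue_messages_per_pod` are hypothetical; the metric must be served through the custom metrics API (`custom.metrics.k8s.io`) by an adapter such as the Prometheus Adapter, otherwise the HPA cannot read it.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: worker-hpa                       # hypothetical
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: worker                         # hypothetical Deployment
  minReplicas: 2
  maxReplicas: 20
  metrics:
    - type: Pods                         # metric averaged across the target's pods
      pods:
        metric:
          name: queue_messages_per_pod   # hypothetical custom metric
        target:
          type: AverageValue
          averageValue: "30"             # aim for about 30 queued messages per pod
```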

Based on System Metrics:

For system metrics such as CPU, a typical HPA scales an application out when average CPU utilization rises above 50% of the pods' CPU requests and scales it in when usage drops, keeping between 3 and 10 replicas.
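A sketch of that HPA for a hypothetical Deployment `my-app`. CPU utilization is measured against the containers' CPU requests, so the pods must set requests, and the cluster needs metrics-server (or another provider of the resource metrics API).

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app-hpa          # hypothetical
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app            # hypothetical
  minReplicas: 3
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 50   # percent of requested CPU
```

The controller computes `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, clamped to the min/max. For example, 4 replicas averaging 75% CPU against the 50% target give ceil(4 * 75 / 50) = 6 replicas. The imperative equivalent is `kubectl autoscale deployment my-app --cpu-percent=50 --min=3 --max=10`.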

4. Node Affinity for Workload-Specific Scheduling

Node affinity allows you to specify rules that limit which nodes your pod can be scheduled on, based on labels on nodes. This is useful for directing workloads to nodes with specific hardware (like GPUs), ensuring data locality, or adhering to compliance and data sovereignty requirements.

When to Use: Use node affinity when your applications require specific node capabilities or when you need to control the distribution of workloads for performance optimization, legal, or regulatory reasons.
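A sketch combining a hard and a soft rule. The label `hardware-type=gpu`, the zone, the Pod name, and the image are hypothetical; nodes would be labeled beforehand, for example with `kubectl label nodes <node-name> hardware-type=gpu`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: gpu-workload                     # hypothetical
spec:
  affinity:
    nodeAffinity:
      # Hard rule: only nodes labeled hardware-type=gpu are eligible.
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: hardware-type       # hypothetical node label
                operator: In
                values:
                  - gpu
      # Soft rule: among eligible nodes, prefer one zone (weight 1-100).
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 50
          preference:
            matchExpressions:
              - key: topology.kubernetes.io/zone
                operator: In
                values:
                  - eu-west-1a           # hypothetical zone
  containers:
    - name: app
      image: my-gpu-app:latest           # hypothetical image
```

"IgnoredDuringExecution" means the rules apply only at scheduling time: if a node's labels change later, pods already running there are not evicted.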

5. Pod Priority and Preemption for Critical Workloads

Kubernetes allows you to assign priorities to pods, and higher priority pods can preempt (evict) lower priority pods if necessary. This ensures that critical workloads have the resources they need, even in a highly congested cluster.

When to Use: Use pod priority and preemption for applications that are critical to your business operations, especially when running in clusters where resource contention is common.
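A sketch of a PriorityClass and a Pod that uses it. The class name, value, Pod name, and image are hypothetical. User-defined classes may use values up to 1,000,000,000; larger values are reserved for built-in classes such as `system-cluster-critical`.

```yaml
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: business-critical                  # hypothetical
value: 1000000                             # higher value = higher priority
globalDefault: false                       # do not apply to pods that name no class
preemptionPolicy: PreemptLowerPriority     # set to Never to queue ahead without evicting
description: "Workloads that may evict lower-priority pods when the cluster is full."
---
apiVersion: v1
kind: Pod
metadata:
  name: payments-api                       # hypothetical
spec:
  priorityClassName: business-critical
  containers:
    - name: api
      image: payments-api:latest           # hypothetical image
```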

6. Custom Resource Definitions (CRDs) for Extending Kubernetes

CRDs allow you to extend Kubernetes with your own API objects, enabling the creation of custom resources that operate like native Kubernetes objects. This is powerful for adding domain-specific functionality to your clusters, facilitating custom operations, and integrating with external systems.

When to Use: CRDs are ideal for extending Kubernetes functionality to meet the specific needs of your applications or services, such as introducing domain-specific resource types or integrating with external services and APIs.
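The endpoint and `CronTab` object below follow the CronTab example from the Kubernetes documentation. A CustomResourceDefinition that would produce them looks roughly like this; it is reconstructed from that example rather than taken from this repository, and applying it with `kubectl apply -f` registers the new resource type.

```yaml
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  # Must be <plural>.<group>, matching the spec fields below.
  name: crontabs.stable.example.com
spec:
  group: stable.example.com          # used in the REST path /apis/<group>/<version>
  versions:
    - name: v1
      served: true                   # exposed via the REST API
      storage: true                  # exactly one version is the storage version
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                cronSpec:
                  type: string
                image:
                  type: string
                replicas:
                  type: integer
  scope: Namespaced                  # objects live inside namespaces
  names:
    plural: crontabs
    singular: crontab
    kind: CronTab
    shortNames:
      - ct                           # enables `kubectl get ct`
```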

After the CustomResourceDefinition is created, the API server serves a new namespaced RESTful API endpoint at:

`/apis/stable.example.com/v1/namespaces/*/crontabs/...`

This endpoint URL can then be used to create and manage custom objects. The kind of these objects is `CronTab`, as set in the `spec.names.kind` field of the CustomResourceDefinition. For example:

```yaml
apiVersion: "stable.example.com/v1"
kind: CronTab
metadata:
  name: my-new-cron-object
spec:
  cronSpec: "* * * * */5"
  image: my-awesome-cron-image
```

List the objects, here using the short name `ct` defined for the resource:

```shell
kubectl get ct -o yaml
```

7. Pod Disruption Budgets

Pod Disruption Budgets (PDBs) help ensure that a minimum number of pods are available during voluntary disruptions, such as node maintenance. This ensures high availability without over-provisioning resources.
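A minimal PodDisruptionBudget sketch, assuming the protected pods carry the hypothetical label `app: my-app`. It tells the Eviction API (used by `kubectl drain`, for example) to keep at least two of those pods running.

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: my-app-pdb          # hypothetical
spec:
  minAvailable: 2           # or maxUnavailable; set only one of the two
  selector:
    matchLabels:
      app: my-app           # hypothetical label of the protected pods
```

PDBs only govern voluntary, eviction-based disruptions: they do not protect against node crashes, and deleting a pod directly bypasses them.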

8. Cluster Autoscaler

On AWS, Cluster Autoscaler utilizes Amazon EC2 Auto Scaling Groups to manage node groups. Cluster Autoscaler typically runs as a Deployment in your cluster.

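Using Mixed Instances Policies and Spot Instances: Cluster Autoscaler's scheduling simulations assume every node in a node group has the same capacity, so a mixed-instances node group should combine instance types with the same number of vCPUs and a similar amount of memory. Pods can then be pinned to that set of instance types with node affinity: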

```yaml
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              # node.kubernetes.io/instance-type is the GA label; the older
              # beta.kubernetes.io/instance-type label is deprecated.
              - key: node.kubernetes.io/instance-type
                operator: In
                values:
                  - r5.2xlarge
                  - r5d.2xlarge
                  - r5a.2xlarge
                  - r5ad.2xlarge
                  - r5n.2xlarge
                  - r5dn.2xlarge
                  - r4.2xlarge
                  - i3.2xlarge
```

Cluster Autoscaler IAM policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "autoscaling:DescribeAutoScalingGroups",
        "autoscaling:DescribeAutoScalingInstances",
        "autoscaling:DescribeLaunchConfigurations",
        "autoscaling:DescribeScalingActivities",
        "autoscaling:DescribeTags",
        "ec2:DescribeLaunchTemplateVersions",
        "ec2:DescribeInstanceTypes",
        "ec2:GetInstanceTypesFromInstanceRequirements",
        "eks:DescribeNodegroup"
      ],
      "Resource": ["*"]
    },
    {
      "Effect": "Allow",
      "Action": [
        "autoscaling:SetDesiredCapacity",
        "autoscaling:TerminateInstanceInAutoScalingGroup"
      ],
      "Resource": ["*"]
    }
  ]
}
```

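IAM role trust policy (`${providerarn}` and `${clusterid}` are template placeholders; the first is presumably the ARN of the cluster's IAM OIDC identity provider and the second the provider's `sub` condition key, which on EKS has the form `oidc.eks.<region>.amazonaws.com/id/<id>:sub`):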

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "ec2.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    },
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "${providerarn}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${clusterid}": "system:serviceaccount:kube-system:cluster-autoscaler"
        }
      }
    }
  ]
}
```
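The web-identity statement in the trust policy only lets the `cluster-autoscaler` service account in `kube-system` assume the role. With IAM Roles for Service Accounts on EKS, that service account is linked to the role through the `eks.amazonaws.com/role-arn` annotation. A minimal sketch; the account ID and role name in the ARN are hypothetical.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cluster-autoscaler       # must match the name in the trust policy condition
  namespace: kube-system
  annotations:
    # Hypothetical ARN: use the role that carries the trust policy above.
    eks.amazonaws.com/role-arn: arn:aws:iam::111122223333:role/cluster-autoscaler
```

The Cluster Autoscaler Deployment then runs with `serviceAccountName: cluster-autoscaler`, so its pods receive credentials for that role.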

Source references:

- OIDC and roles: https://github.com/kubernetes/autoscaler/blob/master/cluster-autoscaler/cloudprovider/aws/CA_with_AWS_IAM_OIDC.md
- Autoscaler documentation: https://github.com/kubernetes/autoscaler/blob/master/cluster-autoscaler/cloudprovider/aws/README.md

9. Admission Controller

An admission controller is a piece of code that intercepts requests to the Kubernetes API server after the request is authenticated and authorized, but before the object is persisted.

Why do I need them?

Several important features of Kubernetes require an admission controller to be enabled in order to properly support the feature. As a result, a Kubernetes API server that is not properly configured with the right set of admission controllers is an incomplete server and will not support all the features you expect.

- To enable admission plugins: `kube-apiserver --enable-admission-plugins=NamespaceLifecycle,LimitRanger ...`
- To disable admission plugins: `kube-apiserver --disable-admission-plugins=PodNodeSelector,AlwaysDeny ...`
- To see which plugins are enabled by default: `kube-apiserver -h | grep enable-admission-plugins`
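On a kubeadm-built cluster the API server runs as a static pod, so these flags are usually set by editing its manifest, `/etc/kubernetes/manifests/kube-apiserver.yaml`; the kubelet restarts the API server when the file changes. A hedged excerpt: the plugin list is illustrative, and the cluster's other flags must stay in place. Managed control planes such as EKS or GKE generally do not expose these flags.

```yaml
# Excerpt from /etc/kubernetes/manifests/kube-apiserver.yaml (kubeadm static pod).
apiVersion: v1
kind: Pod
metadata:
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
    - name: kube-apiserver
      command:
        - kube-apiserver
        # Comma-separated; these plugins run in addition to the default-enabled ones.
        - --enable-admission-plugins=NodeRestriction,NamespaceLifecycle,LimitRanger
        # ...other existing flags stay unchanged
```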

5. Ingress Templates


6. Persistent Volume Templates


7. RBAC

RBAC enforces fine-grained access control on Kubernetes resources, using roles and role bindings to restrict permissions within the cluster.

If you want to define a role within a namespace, use a Role; if you want to define a role cluster-wide, use a ClusterRole.

A role binding grants the permissions defined in a role to a user or set of users. It holds a list of subjects (users, groups, or service accounts), and a reference to the role being granted. A RoleBinding grants permissions within a specific namespace whereas a ClusterRoleBinding grants that access cluster-wide.
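A sketch of a namespaced Role and RoleBinding, following the `pod-reader` example from the Kubernetes RBAC documentation. The user `jane` is hypothetical.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: pod-reader
rules:
  - apiGroups: [""]                 # "" is the core API group
    resources: ["pods"]
    verbs: ["get", "watch", "list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: default                # permissions apply only in this namespace
subjects:
  - kind: User
    name: jane                      # hypothetical user
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role                        # use ClusterRole to reuse a cluster-wide role here
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```

To check the result, an administrator who is allowed to impersonate users can run `kubectl auth can-i list pods --namespace default --as jane`, which should answer `yes`.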

8. Annotation

Annotations are key-value pairs that provide additional metadata or information about Kubernetes resources.

- Unlike labels, annotations are not used to identify or select objects, but rather to add non-identifying metadata to objects.

- Annotations are often used to attach arbitrary configuration or descriptive information to resources, which can be used by tools, controllers, or operators to make decisions or perform actions.

- Annotations can be added to most Kubernetes resources, including pods, services, deployments, ingress resources, etc.

- Common use cases for annotations include storing build information, specifying monitoring configurations, adding deployment notes, and defining traffic routing rules.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-deployment
  annotations:
    my-company.com/build-number: "1234"
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-container
          image: my-image:latest
```